I use multiple monitors, and sometimes it would be useful to have hotkeys that move the active window to a particular place on a particular screen. I took a quick look at ready-made software, but none of it seemed to solve my specific problem.

Luckily I found AutoHotkey, which lets you bind various actions to keys. The actions are written in its own scripting language.
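
As a minimal illustration of what binding an action to a key looks like (a sketch in AutoHotkey v1 syntax, not part of the script below), the following hotkey shows the position and size of the active window. The choice of Win+Numpad0 is an assumption made for this example; `#` stands for the Windows key.

```autohotkey
; Sketch only: Win+Numpad0 is a hypothetical binding picked for this example.
; Shows the position and size of the active window in a message box.
#Numpad0::
WinGetPos, x, y, w, h, A   ; "A" means the active window
MsgBox, Active window: x=%x% y=%y% width=%w% height=%h%
return
```

The reported values are in the same coordinate system that `WinMove` uses, so this can also help when working out coordinates for your own monitor layout.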

A number of scripts for this kind of window moving already exist, but instead of working out which one would suit my needs, I decided to roll my own. My solution is pretty limited, as it relies on hardcoded coordinates. A number of other scripts provide functions for determining the window sizes and calculating the positions.

To use the script, install AutoHotkey and copy the script into, for example, the autohotkey.ahk file that is generated as a sample (you can replace its existing content). This has been tested on Windows 8.

; Simple AutoHotkey script for moving windows around on

; three monitors

;

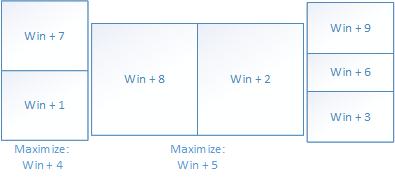

; The screens I'm using (from left to right)

; - 1200x1920 (24", portrait)

; - 2560x1600 (30", landscape, main screen)

; - 1200x1920 (24", portrait)

;

; Left monitor is split into upper and lower halves

; Center monitor is split into left and right halves

; Right monitor is split vertically into three parts

;

; Win+numpad combinations are used to position the active

; window at the locations mentioned above. Coordinates are

; hardcoded. (0,0) is the top-left corner of the main screen.

; The coordinates on other screens depend on how they are

; positioned in the Windows monitor configuration (you can adjust

; the vertical position of the screen there). AutoHotkey

; Window Spy is a good tool for finding the coordinates if you

; don't want to do the math :)

;

; Juha Palomäki, 2013, http://juhap.iki.fi

; Left screen, top half

#Numpad7::

win:=WinExist("a") ;

WinRestore

WinMove,,,-1200,-186,1200,960

return

; Left screen, bottom half

#Numpad1::

win:=WinExist("a") ;

WinRestore

WinMove,,,-1200,774,1200,960

return

; Left screen, maximize

#Numpad4::

win:=WinExist("a")

WinMaximize

WinMove,,,-1200,-186,1200,1920

return

; Center screen, left half

; Full height is not used in order to leave space for taskbar

#Numpad8::

win:=WinExist("a")

WinRestore

WinMove,,,0,0,1280,1558

return

; Center screen, right half

#Numpad2::

win:=WinExist("a")

WinRestore

WinMove,,,1280,0,1280,1558

return

; Center screen, maximize

#Numpad5::

win:=WinExist("a")

WinMaximize

WinMove,,,0,0,2560,1558

return

; Right screen, top

#Numpad9::

win:=WinExist("a")

WinRestore

WinMove,,,2561,-185,1200,563

return

; Right screen, middle

#Numpad6::

win:=WinExist("a")

WinRestore

WinMove,,,2561,380,1200,685

return

; Right screen, bottom

#Numpad3::

win:=WinExist("a")

WinRestore

WinMove,,,2561,1066,1200,657

return
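
The script above depends on hardcoded coordinates. As a rough sketch of the alternative, where positions are calculated instead, the following uses AutoHotkey's `SysGet` command to read a monitor's work area and places the active window on a fraction of it. `MoveToFraction`, the monitor number and the Win+Ctrl+numpad bindings are all assumptions made for this example; the numbers Windows assigns to your monitors may differ, so treat this as a starting point rather than a drop-in replacement.

```autohotkey
; Sketch only, AutoHotkey v1 syntax. MoveToFraction is a hypothetical helper
; and the monitor number (1) is an assumption; check which number Windows
; has assigned to each of your screens.

; Move the active window to a fraction of a monitor's work area.
; xFrac, yFrac, wFrac and hFrac are fractions (0.0 to 1.0) of that area.
MoveToFraction(monitor, xFrac, yFrac, wFrac, hFrac)
{
    ; The work area excludes the taskbar, so the height needs no manual adjustment.
    SysGet, area, MonitorWorkArea, %monitor%
    areaW := areaRight - areaLeft
    areaH := areaBottom - areaTop
    x := Round(areaLeft + areaW * xFrac)
    y := Round(areaTop + areaH * yFrac)
    w := Round(areaW * wFrac)
    h := Round(areaH * hFrac)
    WinRestore, A
    WinMove, A,, x, y, w, h
}

; Example bindings (hypothetical keys, chosen so they do not clash with the script above)
#^Numpad8::MoveToFraction(1, 0, 0, 0.5, 1)     ; left half of monitor 1
#^Numpad2::MoveToFraction(1, 0.5, 0, 0.5, 1)   ; right half of monitor 1
```

Because the sizes come from the work area at the moment the hotkey is pressed, the same bindings should keep working if a screen's resolution or the taskbar position changes.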